Breaking the AI Trust Wall: Why Explainability in AI Is the Future of Smarter, Safer Insurance

Ever found yourself questioning an AI-driven decision at work—something that seemed accurate, but you couldn’t explain why? If so, you’re not alone. Across the U.S., insurance professionals are running headfirst into what many call the “AI trust wall.” Automation has revolutionized claims processing, underwriting, and risk analysis, but there’s a growing problem: when artificial intelligence makes a decision, it often can’t explain its reasoning in human terms. And in an industry built on trust and accountability, that’s a serious issue.

The Rise of Explainability in AI

Explainable AI (XAI) refers to the ability of machine learning models to communicate clearly how and why they reach particular decisions. Many high-performing models, such as deep neural networks and large ensembles of decision trees, work as “black boxes”: their outputs emerge from thousands or millions of learned parameters that no person can read directly. These systems may be accurate, but their lack of transparency creates major compliance, reputational, and financial risks.

In the American insurance industry, this problem is no longer theoretical. When an automated model denies a claim or adjusts a premium based on logic no one can articulate, regulators and customers demand answers. Companies that can’t produce those answers risk fines, audits, and eroding trust.

The Real Cost of Opaque AI

Consider a large Midwest insurer that had to spend nearly $700,000 and six months rebuilding its legacy AI systems because state regulators questioned how certain claim decisions were made. The models worked—but no one could explain the “why.” It’s a cautionary tale: what you save in automation today could cost you in compliance tomorrow.

Beyond compliance, opaque AI also slows down internal adoption. Underwriters and adjusters often resist fully automated systems when they don’t understand the reasoning behind them. The result? Manual overrides, delayed processes, and a loss of the very efficiency that AI was meant to create.

XAI as a Competitive Advantage

Forward-thinking carriers are realizing that explainability isn’t just a regulatory checkbox—it’s a business strategy. Explainable AI enables insurers to trace model logic, verify fairness, and maintain documentation that satisfies both regulators and customers.

For example, in property and casualty insurance, XAI helps clarify how individual risk variables influence pricing. In health insurance, it helps verify that coverage recommendations rest on documented data factors rather than hidden biases. And in auto insurance, it can build customer trust by showing why a premium rose after specific claims or changes in driving behavior.
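In P&C pricing, one long-established and inherently interpretable structure is a multiplicative rating plan: a base rate multiplied by a relativity for each rating factor. The sketch below assumes such a plan, with hypothetical factors and numbers (not any carrier’s filed rates), to show how a premium increase after an at-fault claim can be traced to the exact factor that moved.

```python
# Hypothetical multiplicative rating plan, for illustration only.
BASE_RATE = 800.0  # assumed annual base premium in dollars

RELATIVITIES = {
    "territory": {"urban": 1.20, "suburban": 1.00, "rural": 0.90},
    "at_fault_claims_3yr": {0: 1.00, 1: 1.25, 2: 1.60},
    "annual_mileage": {"low": 0.95, "medium": 1.00, "high": 1.10},
}


def premium(driver: dict) -> float:
    """Base rate multiplied by the relativity for each rating factor."""
    total = BASE_RATE
    for factor, table in RELATIVITIES.items():
        total *= table[driver[factor]]
    return round(total, 2)


def explain_change(before: dict, after: dict) -> list:
    """Describe each rating factor whose relativity changed, with its multiplier."""
    reasons = []
    for factor, table in RELATIVITIES.items():
        old, new = table[before[factor]], table[after[factor]]
        if old != new:
            reasons.append(
                f"{factor}: {before[factor]} -> {after[factor]} (x{new / old:.2f})"
            )
    return reasons


before = {"territory": "suburban", "at_fault_claims_3yr": 0, "annual_mileage": "medium"}
after = dict(before, at_fault_claims_3yr=1)  # same driver after one at-fault claim
print(premium(before), premium(after))  # 800.0 1000.0
print(explain_change(before, after))  # ['at_fault_claims_3yr: 0 -> 1 (x1.25)']
```

Because every factor’s effect is an explicit multiplier, the explanation falls directly out of the model. More complex models lose that property, which is where post-hoc explanation tools come in.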

New Tools and Techniques in Explainable AI

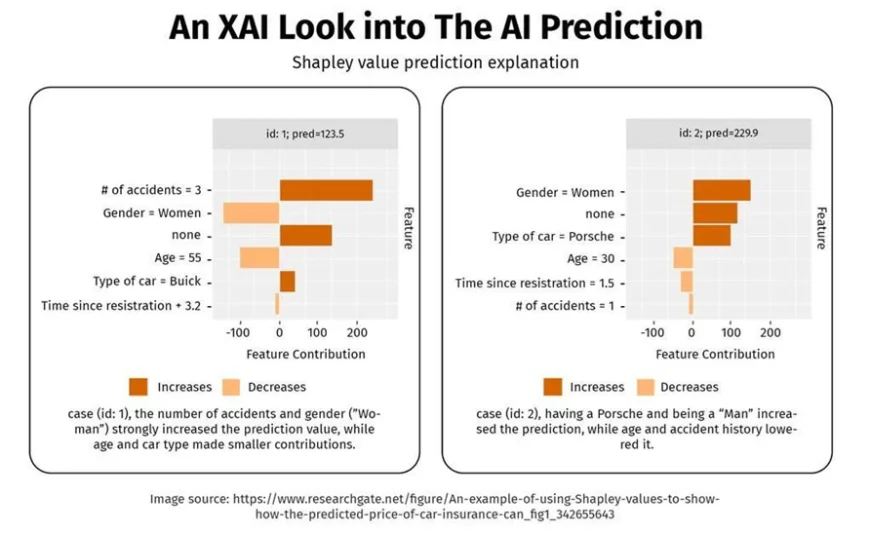

The technology behind XAI is evolving rapidly. U.S.-based insurtechs and analytics firms are integrating tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), which estimate how much each input feature, such as driver age or claims history, contributed to a specific prediction. These techniques help bridge the gap between data scientists and business users by translating complex model outputs into simple, actionable insights.
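To make the idea behind SHAP concrete, the sketch below computes exact Shapley values from scratch for a hypothetical three-feature claim-risk model that includes one interaction term. It assumes a single baseline applicant as the reference point and enumerates every coalition of features, which is only feasible for a handful of features. It does not use the `shap` package, which relies on faster algorithms and usually a background dataset rather than one baseline.

```python
from itertools import combinations
from math import factorial


def risk_score(x: dict) -> float:
    """Hypothetical claim-risk model (illustration only) with one interaction."""
    score = 0.02 * max(0, 30 - x["driver_age"]) + 0.3 * x["prior_claims"]
    score += 0.00001 * x["vehicle_value"]
    # Interaction: young drivers with repeat claims get an extra bump,
    # so the model is not purely additive.
    if x["driver_age"] < 25 and x["prior_claims"] >= 2:
        score += 0.5
    return score


def shapley_values(model, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values against a single baseline.

    Features outside a coalition take their baseline value. Every coalition
    is enumerated, so cost grows as 2**n in the number of features.
    """
    features = list(instance)
    n = len(features)

    def value(coalition: set) -> float:
        mixed = {f: instance[f] if f in coalition else baseline[f] for f in features}
        return model(mixed)

    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


applicant = {"driver_age": 22, "prior_claims": 2, "vehicle_value": 30000}
baseline = {"driver_age": 40, "prior_claims": 0, "vehicle_value": 30000}
print(shapley_values(risk_score, applicant, baseline))
```

The contributions always sum to the gap between the applicant’s score and the baseline score (the “efficiency” property), and the interaction bonus is split evenly between the two features that trigger it. For this applicant that gives roughly 0.41 for driver age, 0.85 for prior claims, and 0 for vehicle value, which matches the baseline.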

Moreover, some carriers now implement AI governance frameworks that require model explanations as a condition of deployment. These frameworks standardize documentation, make biased outcomes easier to detect, and provide traceability for every AI-driven outcome, a crucial step in satisfying both auditors and policyholders.
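One way to enforce “no explanation, no deployment” at the level of individual decisions is to make the explanation a required part of the audit record. The sketch below assumes a hypothetical `DecisionRecord` schema (the field names and values are illustrative, not drawn from any standard or framework) that rejects records without an explanation and produces a SHA-256 fingerprint of each record for traceability.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical audit record for one AI-driven outcome (illustrative schema)."""

    model_id: str
    model_version: str
    inputs: dict
    outcome: str
    # Feature name -> contribution, e.g. Shapley values from an explainer.
    explanation: dict
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        # Assumed governance rule: a decision without an explanation is rejected.
        if not self.explanation:
            raise ValueError("decision rejected: explanation is required")

    def fingerprint(self) -> str:
        """SHA-256 of the record's canonical (key-sorted) JSON form."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


record = DecisionRecord(
    model_id="claims-triage",
    model_version="2.3.1",
    inputs={"driver_age": 22, "prior_claims": 2},
    outcome="refer_to_adjuster",
    explanation={"driver_age": 0.41, "prior_claims": 0.85},
)
print(record.fingerprint())
```

Storing the fingerprint separately, for example in an append-only log, lets an auditor later confirm that a recorded decision and its explanation have not been altered.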

The Road Ahead: Balancing Speed and Transparency

There’s no question that AI will continue to shape the insurance landscape in the coming years. The challenge is ensuring that innovation doesn’t outpace understanding. As state insurance regulators, coordinating through the NAIC (National Association of Insurance Commissioners), tighten their oversight of automated systems, companies that invest early in explainability will stand out as industry leaders rather than laggards forced to catch up.

Explainability in AI is not about slowing innovation; it’s about making it sustainable. It helps ensure that automation enhances human decision-making rather than blindly replacing it.

Final Thoughts

The next era of insurance will belong to carriers that can both automate and articulate. Explainable AI isn’t just a safeguard—it’s a bridge between technology, compliance, and human trust. In a market where credibility and accountability matter as much as speed, XAI is more than a buzzword. It’s the future of responsible automation.