A Beginner’s Guide to Hyperparameter Tuning

Learn how hyperparameter tuning improves machine learning models by finding settings that raise accuracy and balance underfitting against overfitting.

Machine learning models rely on many factors to perform well, and one of the most important is hyperparameter tuning. This guide will help break it down in simple terms.

What are Hyperparameters?

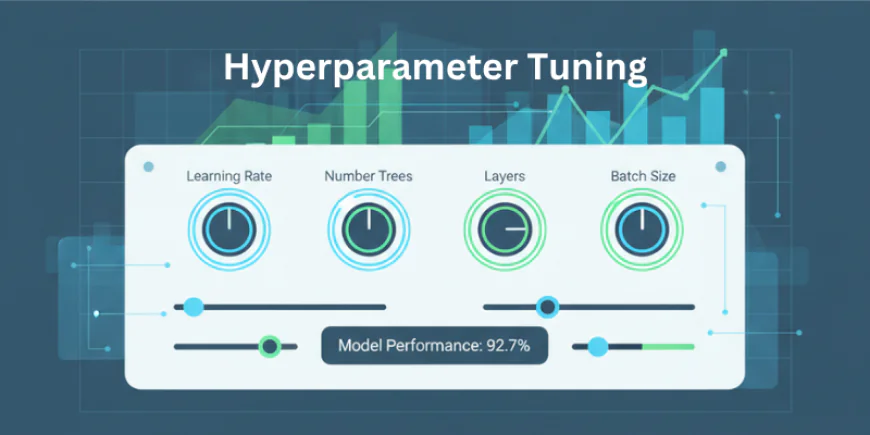

Hyperparameters are settings you choose before training a machine learning model. These are not learned from the data but are manually set by the person building the model. Common examples include the number of trees in a random forest, the learning rate in gradient boosting, or the number of layers in a neural network.

Think of hyperparameters like the knobs on a machine. Turning them in different ways can affect how the machine performs. In machine learning, the right combination of hyperparameters can greatly improve model accuracy, while the wrong setup can lead to poor results.
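To make the difference concrete, here is a minimal Python sketch that fits a straight line with gradient descent. It is an illustration, not tied to any particular library: `train_linear_model`, the toy data, and the chosen values are assumptions. The weight `w` and bias `b` are learned from the data, while `learning_rate` and `n_epochs` are hyperparameters you fix before training starts.

```python
# Hyperparameters: fixed by you before training starts (illustrative values).
LEARNING_RATE = 0.05
N_EPOCHS = 1000


def train_linear_model(xs, ys, learning_rate, n_epochs):
    """Fit y ~ w * x + b with plain gradient descent on mean squared error.

    w and b are parameters: the loop learns them from the data.
    learning_rate and n_epochs are hyperparameters: they control how the
    loop runs, and the loop never changes them.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)**2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = train_linear_model(xs, ys, LEARNING_RATE, N_EPOCHS)
print(f"learned w = {w:.3f}, b = {b:.3f}")
```

With `learning_rate=0.05` the loop settles at `w = 2`, `b = 1`. Rerunning it with `learning_rate=0.2` makes each update overshoot, and the values grow without bound instead of converging: the same data and the same model, ruined by one badly chosen knob.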

Why is Hyperparameter Tuning Important?

If you do not set them yourself, most machine learning libraries fall back on default hyperparameter values. These defaults may not be the best choice for your specific dataset or problem. By tuning hyperparameters, you can improve how well the model fits the data, reduce errors, and make better predictions.

Hyperparameter tuning helps prevent both underfitting and overfitting. Underfitting occurs when the model is too simple to capture the patterns in the data. Overfitting happens when the model is too complex and starts to memorize the training data, noise included, instead of learning patterns that generalize to new data. Tuning helps find the right balance between the two.
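The sketch below illustrates both failure modes with k-nearest-neighbours regression, a simple model swapped in here purely for illustration. Its one hyperparameter, `k`, is the number of nearby training points averaged to make a prediction. With `k=1` the model reproduces every training point exactly, so its training error is zero but it chases the noise (overfitting); with `k=40`, every training point, it predicts the same average everywhere (underfitting). The noisy sine-wave data and the helper names are hypothetical.

```python
import math
import random


def make_data(n, rng):
    """Noisy samples of y = sin(x): a hypothetical stand-in for a real dataset."""
    xs = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.uniform(-0.3, 0.3)) for x in xs]


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error on `data` of a k-NN model built from `train`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(42)
train = make_data(40, rng)
validation = make_data(40, rng)

for k in (1, 5, 40):
    print(f"k={k:2d}  train MSE={mse(train, train, k):.3f}  "
          f"validation MSE={mse(train, validation, k):.3f}")
```

Comparing the two columns is the usual way to tell the problems apart: a large gap between training and validation error points to overfitting, while high error on both points to underfitting.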

How Does Hyperparameter Tuning Work?

Hyperparameter tuning is the process of trying different combinations of hyperparameter values and comparing their performance. The goal is to find the combination that gives the best results on your validation data: a separate set of data, held out from training, that is used to evaluate each candidate model.
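A minimal sketch of that loop, assuming a simple hold-out split: shuffle the data, set aside a quarter of it for validation, train on the rest, and score every candidate value of one hyperparameter on the same validation set. The model (k-nearest-neighbours regression, with `k` as the hyperparameter), the synthetic data, and the function names `train_validation_split` and `tune_k` are illustrative assumptions.

```python
import math
import random


def train_validation_split(data, validation_fraction, seed):
    """Shuffle a copy of the data and hold out a fraction of it for validation."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def knn_predict(train, x, k):
    """k-nearest-neighbours regression: mean target of the k closest points."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def validation_mse(train, validation, k):
    return sum((knn_predict(train, x, k) - y) ** 2
               for x, y in validation) / len(validation)


def tune_k(data, candidates, validation_fraction=0.25, seed=0):
    """Score every candidate on the same validation set and keep the best."""
    train, validation = train_validation_split(data, validation_fraction, seed)
    scores = {k: validation_mse(train, validation, k) for k in candidates}
    best_k = min(scores, key=scores.get)  # lower error is better
    return best_k, scores


rng = random.Random(7)
xs = [rng.uniform(0, 2 * math.pi) for _ in range(80)]
data = [(x, math.sin(x) + rng.uniform(-0.3, 0.3)) for x in xs]

best_k, scores = tune_k(data, candidates=[1, 3, 5, 9, 15, 30])
for k, score in scores.items():
    print(f"k={k:2d}  validation MSE={score:.3f}")
print("best k:", best_k)
```

Because every candidate is scored on the same held-out points, the comparison is fair. Picking `k` by its error on the training data instead would always favour `k=1`, which simply memorizes those points.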

There are several ways to do this. One common method, called grid search, lists a set of candidate values for each hyperparameter and tries every combination of them. Another approach, called random search, samples a fixed number of combinations at random. Grid search is more thorough within the values you list, but random search can often find good results faster when there are many hyperparameters or values to explore.
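The sketch below contrasts the two strategies under the same budget of 16 evaluations. `validation_score` is a hypothetical stand-in for "train the model with these settings and return its validation accuracy"; it is a made-up smooth function so the example runs instantly. The hyperparameter names, ranges, and the log-scale sampling of the learning rate are assumptions chosen for illustration.

```python
import itertools
import math
import random


def validation_score(learning_rate, max_depth):
    """HYPOTHETICAL stand-in for training a model and measuring validation accuracy.

    A made-up smooth surface that peaks at learning_rate=0.05, max_depth=6.
    """
    lr_term = (math.log10(learning_rate) - math.log10(0.05)) ** 2
    depth_term = ((max_depth - 6) / 4) ** 2
    return 0.9 - 0.05 * lr_term - 0.05 * depth_term


def grid_search(grid):
    """Evaluate every combination in the grid (its Cartesian product)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(n_trials, seed=0):
    """Evaluate n_trials configurations sampled at random."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            # Sample the learning rate on a log scale between 0.001 and 1.
            "learning_rate": 10 ** rng.uniform(-3, 0),
            "max_depth": rng.randint(2, 20),
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "max_depth": [2, 5, 10, 20]}
print("grid search   (16 trials):", grid_search(grid))
print("random search (16 trials):", random_search(16))
```

The grid tests only four distinct learning rates, whereas random search tries sixteen different ones for the same cost. That is the usual argument for random search: when only some hyperparameters really matter, sampling gives you more distinct values of each one per training run.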

Choosing What to Tune

Not all hyperparameters need to be tuned every time. As a beginner, focus on the few that affect your model the most. For example, in a decision tree, important hyperparameters include the maximum depth of the tree and the minimum number of samples required to split a node.
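The cost of tuning grows multiplicatively with every hyperparameter you add, which is a good reason to start with just a few. The sketch below counts the combinations a full grid search would have to train. The hyperparameter names match those of scikit-learn's `DecisionTreeClassifier`; the candidate values and the helper `grid_size` are only illustrative.

```python
import math

# Names follow scikit-learn's DecisionTreeClassifier; the values are illustrative.
focused_grid = {
    "max_depth": [3, 5, 8, None],  # None means no depth limit
    "min_samples_split": [2, 10, 50],
}
wide_grid = {
    **focused_grid,
    "min_samples_leaf": [1, 5, 20],
    "criterion": ["gini", "entropy"],
}


def grid_size(grid):
    """Number of combinations a full grid search must train and evaluate."""
    return math.prod(len(values) for values in grid.values())


print("focused grid:", grid_size(focused_grid), "combinations")  # 4 * 3 = 12
print("wide grid:   ", grid_size(wide_grid), "combinations")     # 4 * 3 * 3 * 2 = 72
```

Adding two more hyperparameters multiplies the work by six, and if each combination is scored with 5-fold cross-validation, 72 combinations become 360 training runs.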

It helps to change one hyperparameter at a time to understand how it affects the results. Once you know which ones the results are most sensitive to, you can test combinations of those.
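One-at-a-time exploration can be sketched as follows: start from a baseline configuration, vary a single hyperparameter across a few values while holding the others fixed, and record how much the validation score moves. `validation_score` is again a hypothetical stand-in with a made-up response (depth matters a lot, the split threshold barely matters), and the baseline and candidate values are assumptions.

```python
def validation_score(params):
    """HYPOTHETICAL stand-in for training a model and returning validation accuracy.

    Made up so that max_depth matters a lot and min_samples_split barely matters.
    """
    depth_penalty = 0.02 * abs(params["max_depth"] - 6)
    split_penalty = 0.001 * abs(params["min_samples_split"] - 10) / 10
    return 0.9 - depth_penalty - split_penalty


baseline = {"max_depth": 4, "min_samples_split": 2}
candidates = {
    "max_depth": [2, 4, 6, 8, 10],
    "min_samples_split": [2, 10, 20, 50],
}


def one_at_a_time(baseline, candidates):
    """Vary each hyperparameter alone, holding the others at their baseline values."""
    sensitivity = {}
    for name, values in candidates.items():
        scores = []
        for value in values:
            params = dict(baseline, **{name: value})  # copy, then change one setting
            scores.append(validation_score(params))
        # Spread of scores: how much this one knob can move the result.
        sensitivity[name] = max(scores) - min(scores)
    return sensitivity


sensitivity = one_at_a_time(baseline, candidates)
for name, spread in sorted(sensitivity.items(), key=lambda item: -item[1]):
    print(f"{name:18s} score range = {spread:.4f}")
```

Hyperparameters with the largest score range are the ones worth exploring further in combination. Keep in mind that this approach can miss interactions, where the best value of one setting depends on another.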

Hyperparameter tuning is an essential step in building successful machine learning models. While it may sound complex at first, the basic idea is simple: you try different settings to see which work best for your specific data and problem.

Start small, test one change at a time, and keep track of your results. Over time, you will develop an intuition for which hyperparameters matter most and how to tune them more efficiently.
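Keeping track of results can be as simple as appending each trial to a CSV file. A minimal sketch using Python's standard `csv` module follows; `log_trial` is a hypothetical helper, it assumes every trial logs the same hyperparameter names, and the scores in the usage lines are placeholders rather than real measurements.

```python
import csv
import os
import tempfile


def log_trial(path, params, score):
    """Append one tuning trial (its settings and validation score) to a CSV file."""
    row = {**params, "validation_score": score}
    new_file = not os.path.exists(path)
    with open(path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(row))
        if new_file:
            writer.writeheader()  # write the column names only once
        writer.writerow(row)


# Usage with placeholder scores; a real run would log measured validation results.
log_path = os.path.join(tempfile.mkdtemp(), "tuning_log.csv")
log_trial(log_path, {"max_depth": 4, "min_samples_split": 2}, 0.861)
log_trial(log_path, {"max_depth": 6, "min_samples_split": 2}, 0.879)

with open(log_path, newline="") as f:
    rows = list(csv.DictReader(f))
best = max(rows, key=lambda r: float(r["validation_score"]))
print("best trial so far:", best)
```

A plain log like this makes it easy to revisit earlier experiments and see which changes actually helped.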


mellowd