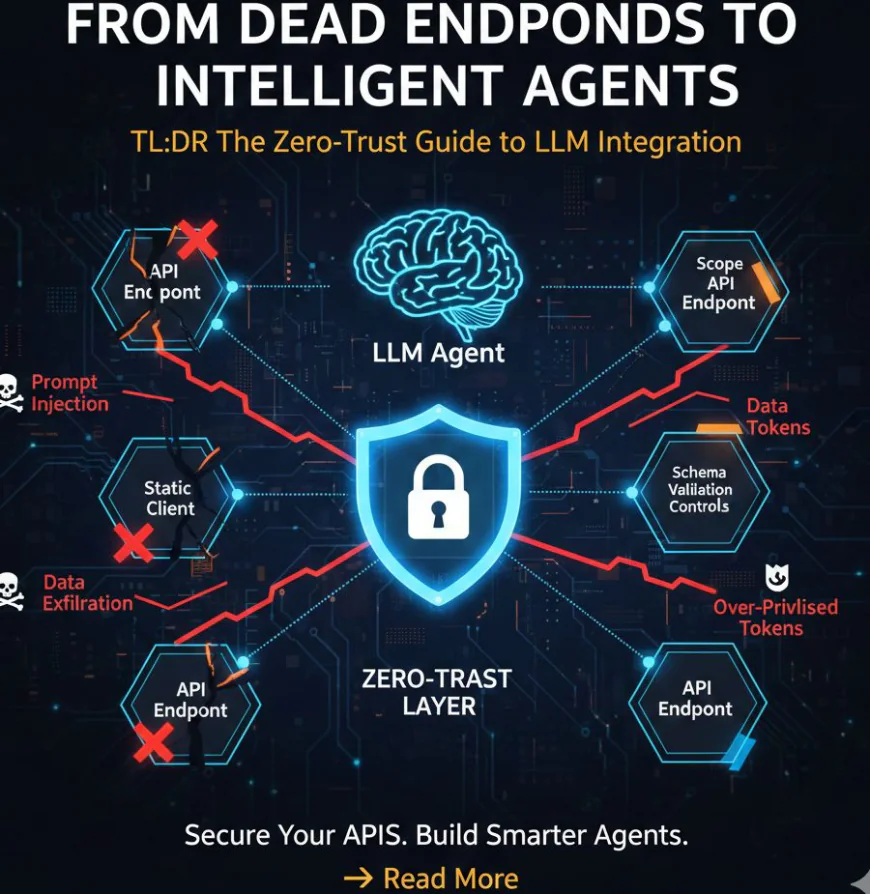

From Dead Endpoints to Intelligent Agents: The Zero-Trust Guide to LLM Integration

Traditional APIs were built for predictable clients and static requests. Large language models (LLMs) change that assumption completely.

The Problem with “Dead” Endpoints in an Agent-Driven World

For years, APIs were treated as passive endpoints. They waited for requests, validated a key, and returned data. This model worked because clients were known, behaviors were predictable, and inputs followed strict formats.

LLMs break all of those assumptions.

An LLM doesn’t just call APIs; it decides when, why, and how to call them. It may chain requests, infer parameters, or generate inputs that look valid but behave dangerously. Suddenly, APIs are no longer serving static clients; they are interacting with autonomous agents.

This shift turns traditional perimeter-based security into a liability.

TL;DR

Traditional APIs were built for predictable clients and static requests. Large language models (LLMs) change that assumption completely. When APIs are exposed to intelligent agents, every request becomes dynamic, contextual, and potentially risky. A zero-trust approach, combined with strict validation, scoped access, and runtime controls, is the only reliable way to integrate LLMs with APIs without breaking security.

Why Zero-Trust Is Mandatory for LLM Integrations

Zero-trust security assumes no request is trusted by default, even if it comes from an authenticated source. This principle is critical when working with LLMs, because:

- LLM outputs are probabilistic, not deterministic

- Prompts can be manipulated through indirect inputs

- Agents may over-request data or misuse permissions

- Context leakage can expose sensitive endpoints

Following modern LLM security guidelines means treating every API call as hostile until proven otherwise, regardless of whether it originates from your own application or an embedded agent.

Key Threats When Exposing APIs to LLMs

1. Prompt-Driven API Abuse

LLMs can be tricked into calling endpoints they shouldn’t access, especially if internal APIs are loosely documented or auto-discoverable.

2. Over-Privileged Tokens

Static API keys with broad permissions allow agents to read or write far more data than intended.

3. Input Hallucination

LLMs may generate syntactically valid but semantically dangerous parameters, leading to unexpected database queries or logic execution.

4. Silent Data Exfiltration

Because responses are fed back into the model context, sensitive data can be unintentionally reused or leaked across sessions.

Effective API threat mitigation requires addressing all of these risks at runtime, not just at authentication.
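As one illustration of a runtime control against silent data exfiltration, the sketch below filters an API response down to an explicit allowlist of fields before it is placed in the model context. The field names and the `redact_for_model` helper are hypothetical, chosen only for this example.

```python
from typing import Any, Dict, FrozenSet

# Assumed allowlist: the only fields the model is ever allowed to see.
MODEL_VISIBLE_FIELDS: FrozenSet[str] = frozenset({"order_id", "status", "eta"})


def redact_for_model(
    response: Dict[str, Any],
    visible: FrozenSet[str] = MODEL_VISIBLE_FIELDS,
) -> Dict[str, Any]:
    """Return a copy of the response containing only allowlisted top-level fields."""
    return {key: value for key, value in response.items() if key in visible}


raw_response = {
    "order_id": "A1",
    "status": "shipped",
    "email": "customer@example.com",  # sensitive: dropped before reaching the model
    "card_last4": "4242",             # sensitive: dropped before reaching the model
}
safe_for_context = redact_for_model(raw_response)
```

An allowlist is used rather than a blocklist so that any field added to the API later stays hidden from the model until someone deliberately exposes it.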

Zero-Trust Best Practices for LLM-API Integration

1. Scope Every API Call

Never expose full APIs to LLMs. Create agent-specific endpoints with minimal permissions and explicit schemas. Each endpoint should answer one question, nothing more.
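A minimal sketch of this scoping idea, assuming a small in-process tool registry: the agent can reach only operations that are explicitly registered, and each operation accepts only its declared parameters. The names `AgentTool`, `AGENT_TOOLS`, `dispatch`, and `get_order_status` are illustrative, not part of any particular framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet


@dataclass(frozen=True)
class AgentTool:
    """One narrowly scoped operation an agent is allowed to invoke."""
    name: str
    handler: Callable[..., Any]
    allowed_params: FrozenSet[str]


def get_order_status(order_id: str) -> dict:
    # Hypothetical read-only lookup; a real handler would query a datastore.
    return {"order_id": order_id, "status": "shipped"}


# Explicit allowlist: anything not registered here is unreachable by the agent.
AGENT_TOOLS: Dict[str, AgentTool] = {
    "get_order_status": AgentTool(
        name="get_order_status",
        handler=get_order_status,
        allowed_params=frozenset({"order_id"}),
    ),
}


def dispatch(tool_name: str, params: Dict[str, Any]) -> Any:
    """Run a registered tool, rejecting unknown tools and unexpected parameters."""
    tool = AGENT_TOOLS.get(tool_name)
    if tool is None:
        raise PermissionError(f"tool not exposed to agents: {tool_name}")
    unexpected = set(params) - tool.allowed_params
    if unexpected:
        raise ValueError(f"unexpected parameters: {sorted(unexpected)}")
    return tool.handler(**params)
```

With this shape, a model that is tricked into requesting `delete_user` fails at dispatch, because that operation was never registered for agent use.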

2. Enforce Schema-Level Validation

Do not rely on the model to “do the right thing.” Validate every parameter against strict schemas, allowed ranges, and business rules before execution.
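The validation step might look like the following sketch, which uses only the Python standard library. The refund endpoint, the `AB-123456` order ID format, and the refund ceiling are assumptions made up for illustration; a real service would substitute its own schema and business rules.

```python
import re

ORDER_ID_PATTERN = re.compile(r"[A-Z]{2}-\d{6}")  # assumed ID format
MAX_REFUND_CENTS = 50_000                          # assumed business rule


def validate_refund_request(params: dict) -> dict:
    """Return a cleaned copy of params, or raise ValueError if anything is off."""
    expected = {"order_id", "amount_cents"}
    if set(params) != expected:
        raise ValueError(f"expected exactly these fields: {sorted(expected)}")

    order_id = params["order_id"]
    if not isinstance(order_id, str) or not ORDER_ID_PATTERN.fullmatch(order_id):
        raise ValueError("order_id must look like 'AB-123456'")

    amount = params["amount_cents"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, int):
        raise ValueError("amount_cents must be an integer")
    if not 0 < amount <= MAX_REFUND_CENTS:
        raise ValueError("amount_cents is outside the allowed range")

    return {"order_id": order_id, "amount_cents": amount}
```

Using `fullmatch` rather than `match` means a value such as `AB-123456; DROP TABLE orders` is rejected outright instead of passing on its valid-looking prefix.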

3. Use Short-Lived, Contextual Tokens

Replace static API keys with time-bound tokens that are scoped to:

- A single action

- A single user

- A single session

This dramatically reduces blast radius if an agent misbehaves.
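To make the idea concrete, here is a rough sketch of a time-bound token bound to one user, one session, and one action, signed with HMAC-SHA256 from the standard library. The token layout, the `issue_token` and `verify_token` helpers, and the placeholder secret are all assumptions for this example; production systems would more likely use an established token format and a managed signing key.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET_KEY = b"replace-with-a-real-secret"  # placeholder; load from a secret store


def _sign(payload: bytes) -> bytes:
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest().encode()


def issue_token(user_id, session_id, action, ttl_seconds=60, now=None):
    """Create a signed token valid for one user, session, and action."""
    now = time.time() if now is None else now
    claims = {"user": user_id, "session": session_id,
              "action": action, "exp": now + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return payload.decode() + "." + _sign(payload).decode()


def verify_token(token, user_id, session_id, action, now=None):
    """Return True only if the signature, expiry, and every scope claim match."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature = token.split(".")
    except ValueError:
        return False  # malformed token
    payload = payload_b64.encode()
    # Check the signature before trusting or decoding any claim.
    if not hmac.compare_digest(signature.encode(), _sign(payload)):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return (
        claims["exp"] > now
        and claims["user"] == user_id
        and claims["session"] == session_id
        and claims["action"] == action
    )
```

Because the action is part of the signed claims, a token issued for `get_order_status` cannot be replayed against a different endpoint, and it stops working once the TTL has passed.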

4. Add Runtime Controls and Rate Limits

LLMs can loop, retry, or chain calls aggressively. Enforce:

- Per-agent rate limits

- Call depth limits

- Cost-based throttling

This is a core pillar of modern API threat mitigation strategies.
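The three limits above can be sketched as a single in-memory guard that is consulted before each agent-initiated call. The `RuntimeGuard` class, its default thresholds, and the notion of a numeric cost per call are assumptions for illustration; a multi-instance deployment would need shared storage instead of process-local dictionaries.

```python
import time
from collections import defaultdict, deque


class RuntimeGuard:
    """Per-agent sliding-window rate limit, call depth limit, and cost budget."""

    def __init__(self, max_calls_per_window=10, window_seconds=60.0,
                 max_depth=3, max_cost=100.0):
        self.max_calls = max_calls_per_window
        self.window_seconds = window_seconds
        self.max_depth = max_depth
        self.max_cost = max_cost
        self._calls = defaultdict(deque)   # agent_id -> timestamps of recent calls
        self._spent = defaultdict(float)   # agent_id -> cumulative cost so far

    def check(self, agent_id, depth, cost, now=None):
        """Record the call if allowed; raise RuntimeError if any limit is hit."""
        now = time.monotonic() if now is None else now

        if depth > self.max_depth:
            raise RuntimeError("call depth limit exceeded")

        # Drop timestamps that have fallen out of the sliding window.
        window = self._calls[agent_id]
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        if len(window) >= self.max_calls:
            raise RuntimeError("rate limit exceeded")

        if self._spent[agent_id] + cost > self.max_cost:
            raise RuntimeError("cost budget exceeded")

        window.append(now)
        self._spent[agent_id] += cost
```

A caller would invoke `guard.check(agent_id, depth, cost)` immediately before executing a tool call and treat any `RuntimeError` as a signal to stop the chain rather than retry.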

5. Log for Intent, Not Just Errors

Traditional logs track failures. LLM integrations must track intent:

- Why was this endpoint called?

- What context triggered it?

- Was the response reused elsewhere?

These insights are essential for debugging and security audits.
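One possible shape for such an intent record, using the standard `logging` and `json` modules, is sketched below. The field names (`stated_reason`, `trigger_context_id`, `response_id`) are hypothetical and simply mirror the three questions above; they are not taken from any logging standard.

```python
import json
import logging
import uuid
from datetime import datetime, timezone

logger = logging.getLogger("agent.audit")


def build_intent_record(agent_id, endpoint, stated_reason,
                        trigger_context_id, response_id=None):
    """Assemble a structured record describing why an agent made a call."""
    return {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "endpoint": endpoint,
        "stated_reason": stated_reason,            # why was this endpoint called?
        "trigger_context_id": trigger_context_id,  # what context triggered it?
        "response_id": response_id,                # lets later reuse be traced
    }


def log_intent(**fields):
    """Emit the record as one JSON line so it can be searched and audited."""
    record = build_intent_record(**fields)
    logger.info(json.dumps(record, sort_keys=True))
    return record
```

Writing each record as a single JSON line keeps the log searchable, so an auditor can follow one `response_id` across later calls to see where a response was reused.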

From APIs to Intelligent Interfaces

The goal is not to block LLMs, but to re-architect APIs as controlled interfaces for reasoning systems.

When done correctly:

- APIs become composable tools for agents

- Security becomes adaptive, not reactive

- Developers gain visibility into agent behavior

This shift aligns directly with emerging LLM security guidelines, where trust is continuously evaluated instead of assumed.

Frequently Asked Questions (FAQs)

Are traditional API gateways enough for LLM security?

No. Gateways handle authentication and rate limiting, but they don’t understand agent intent, prompt context, or semantic misuse.

Should LLMs ever access production APIs directly?

Only through controlled, scoped endpoints designed specifically for agent access, never through general-purpose APIs.

How often should agent permissions rotate?

Ideally per session or per task. Long-lived credentials significantly increase risk.

Can zero-trust slow down LLM workflows?

When designed correctly, no. Most validation and scoping happen automatically and add negligible latency compared to model inference.

Is this approach only for large enterprises?

No. Even small teams integrating LLMs benefit from zero-trust patterns early, before insecure designs become hard to undo.

LLMs are powerful, but without the right security model, they can turn your APIs into attack surfaces overnight.

If you’re planning to expose APIs to intelligent agents, learn how to do it without sacrificing control, privacy, or reliability.

Read the full guide here:

https://blog.apilayer.com/how-to-expose-apis-to-llms-without-breaking-security/

Build smarter agents. Secure your APIs. Start with zero trust.